How can we increase SEO traffic in 30 days?

The following is a 30-day plan for increasing website traffic using SEO:

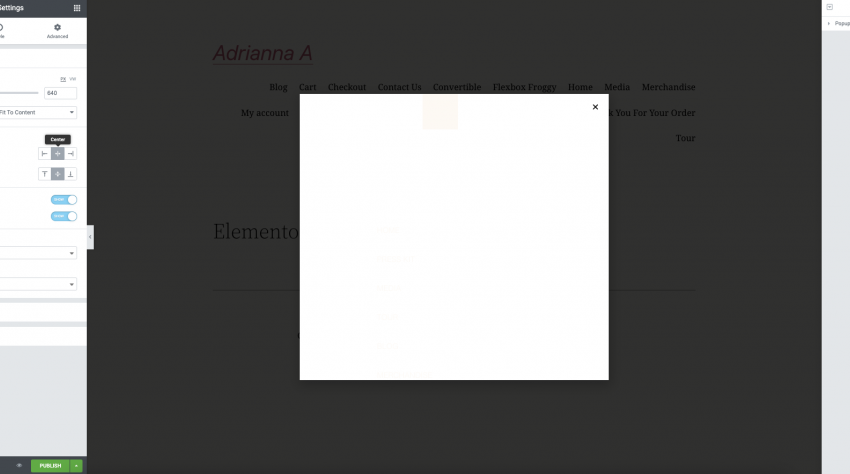

Day 1 – Title & Description Optimisations

Make sure your title and description are optimized. The snippet on the Google search results page is the first thing a person sees when searching for a term. It consists of your page title, URL, and meta description. The title should be brief and to the point, and it affects your keyword ranking. The meta description should accurately describe the content of the page. While it has no direct effect on your search ranking, it does affect your click-through rate!

Practical suggestions for title optimization

- Keep the title short. Google typically truncates anything longer than about 70 characters.
- Include the keywords you want to rank for in the page title.
- Add page titles to any pages that are currently missing them!

Practical suggestions for improving the description

- Keep your description to no more than about 175 characters. Otherwise, Google will cut off the rest!
- Include a call to action to entice people to visit your page.
- State clearly what benefit a user gets from visiting your website.
- Use important keywords.
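The length rules above can be checked automatically. The following is a minimal Python sketch, assuming the 70 and 175 character figures from this guide; the function name `check_snippet` is made up for illustration, and Google's actual cut-off depends on display width, so treat the limits as approximations.

```python
# Limits taken from this guide's rules of thumb (assumed, not official values).
TITLE_LIMIT = 70
DESCRIPTION_LIMIT = 175


def check_snippet(title: str, description: str) -> list[str]:
    """Return a list of human-readable problems for one page's snippet."""
    problems = []
    if not title.strip():
        problems.append("missing title")
    elif len(title) > TITLE_LIMIT:
        problems.append(f"title is {len(title)} characters (limit {TITLE_LIMIT})")
    if not description.strip():
        problems.append("missing description")
    elif len(description) > DESCRIPTION_LIMIT:
        problems.append(
            f"description is {len(description)} characters (limit {DESCRIPTION_LIMIT})"
        )
    return problems
```

An empty list means the title and description pass both checks.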

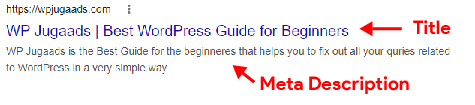

Day 2 – Image Optimization

Optimize images using ALT attributes. Without textual help, search engines cannot fully grasp the content of images. That’s why it’s crucial to add alt text that explains your images: it helps search engines understand what they’re looking at. If an image fails to load for whatever reason, the alt attribute (alternative description) supplies the text shown in its place. Visually impaired people who use text-to-speech software also rely on alt text to access web content. After all, you must optimize for all audience members! In the HTML source code, the alt attribute is part of the image tag.

Practical advice on how to use ALT attributes

- Check whether every image already on your website has an ALT attribute. Every image should have one.
- Include important keywords in the ALT text.
- Use the ALT text to describe what the image shows.
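One way to audit a page for missing ALT attributes is to parse its HTML and list every image without one. This is a rough sketch using only Python's standard library; the class and function names are illustrative, and it treats an empty alt as missing, which may be too strict for purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> that has no non-empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Assumption: an empty alt counts as missing for this audit.
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html: str) -> list[str]:
    """Return the src values of images that need an alt text."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing
```

Feeding it the HTML of each page gives a to-do list of images that still need a description.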

Day 3 – Fixing Broken Links

Find and fix any broken links. A user’s browser displays error code 404 (file not found) when they request a URL that cannot be located on the server. This not only creates a poor user experience but also disrupts search engine crawling.

If your website has a lot of 404 errors, search engines will read this as a sign that the site is poorly maintained.

Broken links usually appear when destination URLs have been changed or mistyped.

Practical advice

- Examine your website for 404 errors and resolve them. For WordPress, you can use the Broken Link Checker plugin.
- Use 301 redirects to send the faulty URLs to the correct URLs.
- Ask other webmasters to fix any broken links pointing to your website.
- Check the links in your navigation menu.

Day 4 – Analyze Redirects

Review your redirects. Certain URLs may need to be redirected temporarily, for example because of a server relocation. Temporary redirects (status code 302) tell Google to keep the old URLs in its index, so visitors can still reach them after the server has moved. A 302 redirect should only be used for a short period of time. To redirect a URL permanently, use status code 301, which points the old URL to the new one and passes on some of the link juice in the process.

Check all of the redirects on your website for errors. Examine whether existing 302 redirects are really necessary or should be replaced with 301 redirects.
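The checks from Day 3 and Day 4 both come down to reading HTTP status codes. Below is a hedged Python sketch: `fetch_status` and `triage` are hypothetical helper names, the advice strings simply restate this guide, and some servers do not answer HEAD requests, in which case a GET would be needed.

```python
from http.client import HTTPConnection, HTTPSConnection
from urllib.parse import urlsplit


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Send a HEAD request and return the raw status code (redirects are not followed)."""
    parts = urlsplit(url)
    connection_class = HTTPSConnection if parts.scheme == "https" else HTTPConnection
    connection = connection_class(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        connection.request("HEAD", path)
        return connection.getresponse().status
    finally:
        connection.close()


def triage(status: int) -> str:
    """Map a status code to the action suggested in this guide."""
    if status == 404:
        return "broken: fix the link or add a 301 redirect to the correct URL"
    if status == 302:
        return "temporary redirect: keep only if short-lived, otherwise switch to 301"
    if status == 301:
        return "permanent redirect: ok"
    if 200 <= status < 300:
        return "ok"
    return "review manually"
```

Calling `triage(fetch_status(url))` for every URL you know about gives a first list of links and redirects to review.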

Day 5 – URL Structures Regulations

Make your URL structures consistent. The URLs on your website serve as road signs to the content that your visitors want to see. The more consistent the website structure, the faster users reach their destination. A good experience lowers bounce rates and increases the time users stay. A consistent URL structure also helps search engines crawl your pages more quickly. Each website gets only a limited crawl budget, so the faster bots can reach all URLs, the more pages they can crawl and index. A consistent directory structure also calls for descriptive URLs, which help users find their way around your website. Because descriptive URLs already hint at the content of the landing page, they are also well suited to marketing campaigns and to sharing content on social media.

Day 6 – Shorter URLs

Make your URLs shorter. Google can handle URLs of up to 2,000 characters with ease, so the length of a URL has no direct impact on the ranking of your page. However, URL length does affect the user experience (which ultimately still affects your SEO). Shorter URLs are easier to remember, post on social media, and advertise with. Another advantage is that a short URL of no more than 74 characters is displayed in full in Google SERP snippets. Avoid redundant stop words (the, a, an, etc.) and conjunctions (and, or) in the URL. Keep your URLs as close to the root domain as feasible.
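As an illustration of these rules, here is a small Python sketch that builds a short slug from a page title and checks a URL against the 74-character figure. The stop-word list contains only the examples named above, and the function names are invented for this example.

```python
import re

STOP_WORDS = {"the", "a", "an", "and", "or"}  # only the examples named in this guide
SNIPPET_LIMIT = 74  # this guide's figure for URLs shown in full


def shorten_slug(text: str) -> str:
    """Lower-case the text, drop stop words, and join the rest with hyphens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(word for word in words if word not in STOP_WORDS)


def fits_snippet(url: str) -> bool:
    """Report whether the full URL stays within the assumed snippet limit."""
    return len(url) <= SNIPPET_LIMIT
```

For example, `shorten_slug("The Best Guide to SEO and Content")` yields `best-guide-to-seo-content`.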

Day 7 – Internal Pages Linking

Create internal links between your pages. Your homepage is the most important and, most likely, the most powerful page on your website. Link power (also known as link juice) flows from the homepage to all other subpages. Internal links and simple navigation menus are used to spread link juice evenly across the subpages. Consistent internal linking also lets you guide the search engine bot: a logical link structure allows the bot to crawl and index your website methodically.

Controlling link power also tells the bot which pages are most important. Some of your website’s pages may not be linked from any other page. These are known as “orphaned pages.” Because bots can only move from link to link, a bot cannot reach them through crawling.

Practical advice

- Remove any links that lead to broken pages (404 error) or to pages that return a server error (status code 500).
- Identify orphaned pages and link to them from thematically similar subpages.
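If you already have a map of which page links to which (for instance from a crawler export), orphaned pages can be found with a few lines of Python. The sketch below assumes the link map is a plain dictionary of page paths; the data shape and the function name are assumptions made for illustration.

```python
def find_orphans(links: dict[str, list[str]], home: str = "/") -> list[str]:
    """Return pages that no other page links to (the homepage is exempt)."""
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(page for page in links if page != home and page not in linked)
```

Each page in the result should receive at least one internal link from a thematically related page.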

Day 8 – Anchor Texts

Increase the relevance of your content by using anchor texts. Anchor texts describe a link and tell the user what to expect from it. Rather than clicking on an unsightly URL, visitors click on familiar keywords and are forwarded to the URL behind the anchor text. Internal links should ideally always use the destination page’s main keyword in the anchor text.

The more pages that use the same keyword to link to a subpage, the more signals the search engine receives that this landing page is particularly relevant for that term. As a result, the page can rank higher for this and related keywords. Avoid non-descriptive anchor texts in your internal links (e.g., “here,” “more,” etc.) and concentrate on keywords instead.

Practical advice

- Try to use the same anchor text whenever you link to a given landing page.
- Make sure the anchor text matches the landing page’s content.

Day 9 – Shorten Click Paths

Keep click paths short. Users who visit a website want to reach the page they are looking for as quickly as possible, so your click paths should be as short as possible. A click path is the route a user follows to get to their intended page. Consider an online purchase: a person may start on the homepage and end up in the shopping cart. Their click path is the number of pages they pass through, and how easily, to find and buy the product they want. The length of the click path is important for your website’s navigation. Search engines benefit from short click paths as well: if the Google bot can reach all subpages of your website in just a few clicks, it can use its crawl budget to scan and index more pages.
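Click path length can be measured as the minimum number of clicks from the homepage to each page. The following Python sketch does this with a breadth-first search over a link map; the dictionary format and the name `click_depths` are assumptions for the example.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks needed to reach each page from the homepage."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths
```

Pages with a large depth are candidates for a more direct link from the navigation or from a higher-level page.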

Day 10 – Site’s Accessibility

Improve your site’s accessibility. Troubleshooting technical faults and making sure your website is always accessible is one of the hardest parts of any long-term SEO strategy. A sitemap.xml file can be used to tell search engines about all of the URLs on your website. Search engines can read this sitemap, which lists all important URLs and their metadata. The Google bot uses this list to visit the website and examine the listed URLs. The sitemap.xml file always has the same structure: it begins by specifying the XML version and the encoding. Additional metadata (for example, the date of the last change) can be added to each URL.

Many content management systems can generate the sitemap.xml. Once you have created the file, submit it in Google Search Console. Google then checks the sitemap.xml for correctness. However, there is no guarantee that every page listed in the sitemap will be crawled and indexed. That decision is up to the search engine.

Practical advice

- Update your sitemap.xml regularly, in particular whenever you change URLs or edit content.
- Use the sitemap to check the status codes of your pages and correct any accessibility issues.
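If your content management system does not produce a sitemap for you, a basic one can be generated with a short script. This Python sketch builds a sitemap.xml with `loc` and `lastmod` entries using the standard sitemap namespace; the function name and the example URLs in the usage note are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Build sitemap.xml content from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for location, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = location
        ET.SubElement(url, "lastmod").text = last_modified  # e.g. "2024-01-15"
    # The XML declaration carries the version and encoding mentioned above.
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

Writing the result of `build_sitemap([("https://example.com/", "2024-01-15")])` to a file named sitemap.xml gives you something to submit in Search Console.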

Day 11 – Website Crawling

Tell the search engines what to look for. The robots.txt file tells search engine crawlers which directories to crawl (allow) and which to ignore (disallow). Before crawling a website, well-behaved bots first consult the robots.txt file. A correct robots.txt file helps ensure that search engines can reach all of your website’s key content. Search engines cannot index your website properly if crucial pages or JavaScript files are blocked from crawling. If all of your content is indexed, your SEO traffic can increase! By default, there are no restrictions on crawling. After creating the robots.txt file, save it in the root directory of your website.

If you don’t want a certain section of your website crawled, define it with a “disallow” rule in the file. For example, the lines “User-agent: *” and “Disallow: /this-directory/” tell all bots to skip that directory.

Hands-on tips

- Use a robots.txt file to give instructions to search engines.
- Make sure that important areas of your website are not excluded from crawling.
- Regularly check the robots.txt file and its accessibility.
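To verify that a robots.txt file does not block important pages by accident, you can test URLs against it with Python's built-in `urllib.robotparser`. The rules and URLs below are made-up examples, and `is_crawlable` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Example rules only: all bots may crawl everything except /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Check whether the given robots.txt rules allow a bot to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

Running this check over your most important URLs after every robots.txt change is a cheap safeguard.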

Orientation & performance of the website. Your website should be thematically oriented to specific keywords for optimal rankings. The website should fulfill the needs of your users. At the same time, it is also important for your web content to load quickly in order to guarantee user satisfaction.

Day 12 – Keyword Research

Investigate keywords. Keyword research helps you identify the terms that appeal to a particular audience, so that your content reaches more of the right people. Keep your website’s objective in mind when choosing keywords. If your website’s primary goal is to generate revenue, use transactional keywords; if it is to deliver valuable information to readers, use informational keywords. We recommend the following tools for finding relevant keywords:

Google Keyword Planner: This tool is part of Google’s AdWords advertising service. To use this free service, you must have an active AdWords account. As soon as you have signed up, you can begin searching for keywords and related ideas. You can also enter websites to see which keywords suit their content. In addition, the tool provides data on monthly search volume.

Google Trends: This free tool shows how often popular search terms are used. It also gives you a preview of likely peaks in demand, which makes Google Trends ideal for seasonal and event-related keywords.

Google Suggest: When you search on Google, it suggests the most popular queries that match what you have typed so far. These include long-tail keyword suggestions based on your short-tail entry. Take advantage of this simple option if you’re short on time or money.

Übersuggest: This is a tried-and-true keyword research tool. It looks through Google Suggest’s suggestions and displays the most relevant search terms.

LongTailPro and SEMRush: These are among the best premium keyword research tools. They offer several features that give you a comprehensive picture of your keywords, and they are worth a try.

Day 13 – Simple Website Navigation

Ensure that your website’s navigation is easy to use. A navigation menu helps users explore a website and find what they’re looking for. A good user experience depends on a well-structured navigation menu. The navigation structure is also crucial for search engines, since it lets them gauge the importance of a URL. Organise your website’s navigation so that users have no difficulty using it.

Long dwell times can help boost your search rankings, so it’s crucial to think about the user experience when it comes to SEO!

Practical advice

- Use anchor texts in navigation elements. This helps search engines better understand the landing page’s content.
- Identify pages with a high bounce rate and take steps to reduce it.
- Use breadcrumb navigation to give users a better overview.

Day 14 – Site Speed

Day 15 – Mobile Friendly Website

Make your website mobile-friendly. Mobile-friendliness is a critical factor for search rankings. Users increasingly access the internet on their mobile devices; in some regions, over 70% of visitors view webpages on mobile. What does this mean for you? Every page of your website should be mobile-friendly. Every single one. Before you start with mobile optimization, you can check your website’s mobile performance with Google’s free mobile-friendliness test. Google’s webmaster resources provide further information.

Day 16 – Analyze Duplicated Content

Determine whether any content is duplicated. Duplicate content can exist on a website for a variety of reasons. Sometimes the same content can be reached and indexed under several URLs. This makes it difficult for search engines to decide which URL is the best search result. As a result, the rankings are “cannibalized”: because Google cannot identify the best version, the page will not appear in the top positions.

Find the sources of duplicate content on your website and correct the issues as soon as possible.

- Check whether your website can be reached both with and without www., and via both HTTP and HTTPS. If several versions are available, use 301 redirects to lead visitors to the preferred version.
- Check whether the same content is indexed in multiple formats, such as print versions or PDFs.
- Check whether your website generates duplicate content by automatically creating lists or documents.

Day 17 – Remove Duplicated Content

Remove duplicate content from your website. Duplicate content is a common problem for online stores in particular. A product, for example, might belong to several categories. If URLs are organized hierarchically, that product can then be found under several URLs. A canonical tag is a reliable way to solve this problem. It tells Google which URL is the “original” and which are duplicates. When the Google crawler visits your website, it can then ignore the copies and index only the original URLs.

- Add a canonical tag to each page on your website.
- For duplicate content, the canonical tag should point to the original page.
- On the original page, include a canonical tag that points to the page itself.
- When inserting canonical tags, double-check that the URLs are correct.
- Don’t use relative URLs in canonical tags.
- If you hired a writer to create content for your website, check the piece before publishing it. To check for plagiarism, I use Grammarly and Copyscape.
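The canonical tag rules above lend themselves to an automated check. The Python sketch below looks for a `link` element with `rel="canonical"` in a page's HTML and flags missing, duplicated, or relative canonical URLs; the names and result strings are invented for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.hrefs.append(attributes.get("href") or "")


def check_canonical(html: str) -> str:
    """Return "ok" or a short description of the canonical tag problem."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.hrefs:
        return "missing canonical tag"
    if len(finder.hrefs) > 1:
        return "multiple canonical tags"
    parts = urlsplit(finder.hrefs[0])
    if not (parts.scheme and parts.netloc):
        return "canonical URL is relative"
    return "ok"
```

The check only inspects the tag itself; whether the canonical URL is the right one for the page still needs a human look.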

Day 18 – Use of TF–IDF

Analyze content quality using TF*IDF. The uniqueness of a website’s content and the added value it gives users help determine whether it achieves high rankings. You can use TF*IDF to see whether your website’s text needs to be optimized. Based on the top 10 results in the SERPs, a TF*IDF analysis calculates how frequently terms related to your chosen primary keyword are used. You can then use these term frequencies to check whether your content already contains the important terms.

- Incorporate the most important terms from the analysis into your content in a meaningful way.
- Put together content for your users that is thematically related.
- To keep up with changes in the SERPs and shifting user interests, analyze your content with a TF*IDF tool regularly.
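To make the idea concrete, here is a minimal TF*IDF calculation in Python. It uses one common variant of the formula (term frequency times the natural log of total documents over documents containing the term) and takes the texts as a plain list; commercial TF*IDF tools may weight terms differently, and fetching the top 10 search results is outside the scope of this sketch.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Split text into lower-case alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tf_idf(documents: list[str]) -> list[dict[str, float]]:
    """Score every term in every document with tf * idf."""
    tokenized = [tokenize(document) for document in documents]
    # Number of documents in which each term appears at least once.
    document_frequency = Counter(term for tokens in tokenized for term in set(tokens))
    total_documents = len(documents)
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(total_documents / document_frequency[term])
            for term, count in counts.items()
        })
    return scores
```

Terms that appear in every document score zero, while terms that are frequent in one text and rare elsewhere score highest, which is what makes them useful for spotting gaps in your own content.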

Day 19 – Unique Headlines

Write distinctive headlines. Your website’s headlines generally serve two purposes: they give the text a logical structure, and they entice the user to keep reading. In the HTML source code, headlines are marked up with h-tags.

Practical advice

- Use only one h1 headline per page.
- Include the page’s primary keyword in the h1 headline.
- Arrange subheadings in hierarchical order (h1, h2, h3, etc.).
- Do not use h-tags to set the font size. Use CSS instead.
- Use thematically complementary terms in subheadings (h2, h3, etc.) wherever possible.
- Keep headlines short and eliminate any unnecessary words.
- Use elements like numbers, bullets, and images to draw attention to your text and make it skimmable.
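Two of the headline rules, exactly one h1 and no skipped levels, can be checked mechanically. This Python sketch collects the heading levels of a page in order and reports violations; treating a jump such as h1 to h3 as an error is an interpretation of "hierarchical order", and the names are illustrative.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Record the level of every h1 to h6 tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def check_headings(html: str) -> list[str]:
    """Return a list of heading structure problems (empty if none are found)."""
    collector = HeadingCollector()
    collector.feed(html)
    problems = []
    h1_count = collector.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    for previous, current in zip(collector.levels, collector.levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current}, skipping a level")
    return problems
```

The check says nothing about keywords or headline length; those still need an editorial review.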

Day 20 – Normalize Content

Curate your content. Content curation is a popular method of gathering, reorganizing, and republishing existing content. By bringing material together, you often give users new insights into topics you have already covered. To be effective at content curation, you must first choose suitable sources and then publish the material on your own site. Your own social media channels can help you spread the content easily. Popular topics attract a lot of visitors.

Practical advice

- Publish illustrative infographics that help others grasp a difficult subject.
- Publish surveys and data on topics your target audience is interested in.
- Write e-books in which you cover a topic thoroughly and in an easy-to-understand way.
- Prepare case studies in which you share your own experiences. These give your users practical insight into your work and skills.
- Publish guest posts on your blog. External experts can provide in-depth knowledge on a topic that your users are interested in.

Day 21 – Content Recycling

Reuse and recycle your content. Webmasters and SEOs can use content republishing to reorganize and refresh material that is already performing well with their target audience. HubSpot calls this “historical optimization.” When republishing, you can’t simply repost the same item and present it as new. To make the content more relevant for your audience, add new figures, examples, current facts, or even new formats. Search engines frequently reward updates to outdated content.

But keep in mind that you’ll only be rewarded if you truly make the content more relevant!

- Check your website’s KPIs, such as dwell time, traffic, and scroll behavior, on a regular basis.
- Look for your most popular content and make sure it’s up to date.
- When you change your content, also update your meta elements, such as the title and description.
- Recycle as much as possible.

Day 22 – High Ratio of Content Code

Maintain a high content-to-code ratio. A search engine interprets thin content as a page that doesn’t have much to offer, so such pages rank poorly in the search results. The most common symptom of thin content is a low ratio of content (text) to code. As a general guideline, text should make up at least 25% of a page.

- Make sure there is enough text on your website.
- Remove superfluous comments and formatting from the source code.
- Structure your content well so that it is easy to read.
- Where feasible, format with CSS rather than HTML.
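The text-to-code ratio can be estimated by comparing the length of the visible text with the length of the full HTML. The Python sketch below is one simple way to do that, assuming script and style contents do not count as text; other tools may measure the ratio differently, so compare the result with the 25% guideline only as a rough indicator.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text nodes, ignoring the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_code_ratio(html: str) -> float:
    """Return visible text length divided by total HTML length (0.0 to 1.0)."""
    if not html:
        return 0.0
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    # Collapse runs of whitespace so indentation does not inflate the text length.
    text = " ".join(" ".join(extractor.chunks).split())
    return len(text) / len(html)
```

A page scoring well below 0.25 is a candidate for more content or leaner markup.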

Day 23 – Modify Content

Maintain a high content-to-code ratio. To a search engine, thin content looks like a page with nothing to offer, so such pages rank poorly. A low content (text) to code ratio is the most common sign of thin content. A typical rule of thumb is that text should make up at least 25% of a page. Make sure there is a contact form on your website.

Day 24 – Internationalize Website

Make your website multilingual. Is your website available in several languages or in versions for different countries? Awesome! Don’t forget to tell the search engines. They will then show users the appropriate country and language version of your content. Users are led straight to the right version from the SERPs, which improves usability. To distinguish between the language and country versions of a multilingual website, use the hreflang tag.

Add this tag to the head section of the page, and be sure to create a separate tag for each language version.

Practical advice

- If a page has a copy in a different language, add a hreflang tag to it.
- Each page of your website should link to all available language versions.
- Provide the hreflang attribute in your XML sitemap.
- To refer to PDFs in other languages, provide a hreflang tag in the head section of HTML documents.
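Since every language version needs its own tag, generating the tags from a single mapping helps keep them consistent across pages. This Python sketch produces one `link` element per language version for the head section; the language codes and URLs in the usage note are placeholders, and the function name is made up.

```python
from html import escape


def hreflang_links(versions: dict[str, str]) -> list[str]:
    """Return one <link> element per language version, for the page's head section."""
    return [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in versions.items()
    ]
```

For example, `hreflang_links({"en": "https://example.com/en/", "de": "https://example.com/de/"})` returns two tags, and the same full set should be placed on both language versions.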

Day 25 – Website Local Search

Optimize your site for local searches. According to a Google survey, more than 80% of people look up retailers and local service providers online before visiting them. That makes it critical to optimize your website for local searches: the online visit is a springboard for new customers and purchases.

Practical advice

- Users frequently use smartphones to look for local retailers, restaurants, and service providers, so make sure your website is mobile-friendly.
- Always use the same business name, address, and phone number (NAP) on your website, and use the same information for entries in business directories.
- Register your business with Google MyBusiness. Aside from the NAP data, be sure to include photos of both you and your business.
- Make sure your content is relevant to your area. Include the city or region in H1 headlines, titles, and meta descriptions.
- Use your service or industry as the main keyword and combine it sensibly with your location or city.
- Set the keyword focus (ALT attributes or URL names) to the combination of city/region and your offer/service.
- Mark up the NAP data in the source code with structured data.
- Add your company to online directories such as Yelp.
- Encourage your customers to leave reviews on your website.
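One widely used way to mark up NAP data is a JSON-LD block with the schema.org `LocalBusiness` type. This guide does not name a specific markup format, so that choice is an assumption here, as are the function name and all of the business details in the usage note.

```python
import json


def local_business_jsonld(name, street, city, postal_code, country, phone):
    """Build a schema.org LocalBusiness description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        "telephone": phone,
    }
    return json.dumps(data, indent=2)
```

The returned string, for example from `local_business_jsonld("Example Bakery", "1 Main Street", "Springfield", "12345", "US", "+1-555-0100")`, would be placed inside a script element of type application/ld+json on the page, using exactly the same NAP details as everywhere else.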

Day 26 – Connect Social Media

Practical advice

- Use social media to promote your content.
- Make sure you’re addressing the right audience, and only post content that interests your target group.
- Speak the language of your target audience in your posts.
- Post regularly, and don’t be afraid to experiment with popular topics.
- To reach a wider audience, use sponsored ads on posts that are particularly engaging.
- Use a preview to see how your posts will look.
- Make your posts more appealing by using photos and videos.

Day 27 – Clean UI

Get users to interact. User-generated content can improve a website’s relevance and freshness. Users can engage with your website in a variety of ways; blog comments, question-and-answer sections on products, and reviews are the most common!

- Encourage your blog’s visitors to comment on your content. Simply include a call to action at the end of the text or ask open-ended questions.
- Write blog posts on controversial topics to prompt feedback.
- Encourage readers to comment on your blog posts by sharing them on social media.
- Let users rate and review your products using free-text fields.
- Let your users answer questions about your products from other users.
- Collect the most frequently asked questions on your website and answer them.

SEO monitoring is not about one-off measures. It’s a never-ending process of improvement. Valid data on user behavior is one of the most important foundations for optimization. Regular monitoring is one of the most important aspects of search engine optimization, since it lets you respond swiftly to changes in traffic or other events.

Day 28 – Google Search Console

Create an account with Google Search Console. Google Search Console is an important tool for keeping track of your website’s performance. Not only do you submit your sitemap.xml in the Search Console, you also get useful information about the most commonly searched terms on Google. In addition, the Search Console warns you about hacked pages and unnatural links. You can also link other Google products, such as Google Analytics and Google AdWords, to the Search Console to exchange data with them.

Using the API, you can also include CTR and traffic statistics, as well as links, in other web analytics tools.

- Create a Google account and enter your website’s details in the Search Console.
- Check the Search Console for HTML improvements regularly, and optimize your metadata.
- Use the Search Console to double-check your markup.
- Analyze the clicks on your landing pages and use the information to improve your content and metadata.
- Review the data on crawl errors.
- Use the “Fetch as Google” feature to submit optimized pages directly to the Google index.

Day 29 – Google Analytics

Sign up for Google Analytics. Google Analytics lets you examine user activity on your website in detail. The available analyses range from simple to complex. Tools such as Google Analytics are essential for evaluating how effective your SEO efforts are.

Google Analytics keeps track of how many people visit your site.

- To enable tracking, place the Google Analytics code snippet on each page.
- Review the most important performance metrics (such as page views, bounce rate, and dwell time) daily.
- Enable email notifications for significant changes in these KPIs.
- Compare the data with earlier periods on a regular basis.
- If you use Google Analytics, make sure your privacy policy is up to date.

Day 30 – Progress Monitoring

Keep track of your progress. There are several ways to evaluate the effectiveness of your website. Use free tools to monitor and analyze the traffic on your website: Google Analytics and Google Search Console are typical monitoring tools, and both are free. Various commercial providers also offer free tools that can greatly assist your SEO efforts.

What an adventure! Over the last 30 days, you’ve been gradually optimizing your website using a range of SEO strategies. We covered tips on technical, on-page, content, and performance optimization, and laid the groundwork for a successful website. You’re on your way to becoming an SEO master! But keep in mind that SEO is a long-term commitment, not a one-time “fling.” Keep up the fantastic work and keep your page updated and optimized. You’ll see: your efforts will be rewarded! Good luck.

Conclusion

We hope this guide helps you increase your SEO traffic in 30 days. We tried our best to keep it as simple as we can. If you found it helpful, let us know in the comment section below.